The rapid development of e-commerce and intelligent manufacturing in the 21st century has placed higher demands on many links of the supply chain and warehousing. Autonomous mobile robots (AMRs) provide flexible, precise, and reliable handling services. They improve the efficiency of moving raw materials and finished products and help address the challenges posed by rapidly rising labor costs, so they have been widely accepted by business owners. In intelligent warehouse handling scenarios, the environment changes at high frequency. Against that background, this article discusses perception and positioning technology for mobile robots in complex environments. It presents a complete, efficient, and flexibly configurable software and hardware system that integrates vision-laser fusion perception and positioning with planning and control, ensuring that AMRs operate stably in business scenarios.

Difficulties of intelligent handling in the warehousing industry

In intelligent handling scenarios in the warehousing industry, human-machine interaction is complex and the environment changes continuously and at high frequency. This strongly affects how an Autonomous Mobile Robot (AMR) localizes itself on site, especially in docking scenarios. There, environmental changes seriously degrade the robot's perception and cause problems such as inaccurate alignment. Ground mobile robots that rely on a single navigation and positioning technology, such as magnetic stripe navigation or two-dimensional code navigation, cannot cope with these complex scenes. The warehousing industry therefore needs mobile robots with powerful hybrid navigation technology that improves perception and positioning and ensures stable operation in complex, changeable scenes.

The mobile robot is equipped with a variety of sensors, including lidar and vision cameras. However, robot positioning, mapping, and motion control face many difficulties in the following scenarios and conditions.

1.1 Empty, open scenes

Warehouses and factories contain only a few fixed targets, such as walls and ceiling beams, and most of the space is empty. At long range, the point cloud scanned by the lidar becomes too sparse, or the laser beams sweep the ground and produce interference data. The effective detection range of a depth camera is generally within 5 meters, because beyond that the noise is too large for the data to be usable. If there are no fixed objects within a 5-meter radius along most of the driving path, the robot receives no depth feedback.
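The sparsity problem follows from simple geometry. Adjacent lidar beams are separated by a fixed angular resolution, so the gap between their returns grows linearly with range. The sketch below is a minimal illustration and assumes a hypothetical 0.25° horizontal resolution, which is not a figure taken from this article.

```python
import math


def beam_spacing(range_m: float, angular_res_deg: float) -> float:
    """Gap between the returns of two adjacent lidar beams at the same range.

    Neighbouring beams are angular_res_deg apart, so at distance range_m
    their hit points are separated by the chord 2 * r * sin(dtheta / 2).
    """
    dtheta = math.radians(angular_res_deg)
    return 2.0 * range_m * math.sin(dtheta / 2.0)


if __name__ == "__main__":
    # Hypothetical 0.25 degree resolution; the spacing grows linearly with range.
    for r in (5.0, 20.0, 50.0):
        print(f"range {r:4.0f} m -> point spacing {beam_spacing(r, 0.25) * 100:.1f} cm")
```

Under this assumption, adjacent points about 50 m away are roughly 22 cm apart. Thin structures can therefore fall between beams entirely.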

A camera can capture image information far enough away, but it is harder to extract visual features such as corner points and lines from distant parts of the image. Depth cannot be recovered from the pixels of a single image. To compute the distance to a point, the camera must move and observe the point again, and the depth is then calculated by triangulation. The farther away the point is, the larger the triangulation error, which increases the positioning error.
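First-order error propagation shows how triangulation error grows with distance. Consider two views separated by a baseline B, here the distance the camera moved between observations, and a focal length f in pixels. Depth is Z = fB/d for a pixel disparity d. A disparity error σ_d therefore produces a depth error of roughly Z²σ_d/(fB). The sketch below assumes hypothetical values of f = 600 px, B = 0.1 m, and σ_d = 0.5 px; these are not parameters of the system described here.

```python
def triangulated_depth(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point observed from two views separated by baseline_m."""
    return f_px * baseline_m / disparity_px


def depth_std(f_px: float, baseline_m: float, depth_m: float,
              disparity_std_px: float = 0.5) -> float:
    """First-order depth uncertainty: |dZ/dd| * sigma_d = Z**2 * sigma_d / (f * B)."""
    return depth_m ** 2 * disparity_std_px / (f_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical camera: f = 600 px, camera moved 0.1 m between the two views.
    for z in (2.0, 5.0, 10.0):
        print(f"depth {z:4.1f} m -> std {depth_std(600.0, 0.1, z):.3f} m")
```

The error scales with Z², so a point five times farther away is about 25 times less certain for the same baseline.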

1.2 High dynamics and large scene changes

In warehousing and manufacturing scenarios, objects are highly dynamic. In Figure 1, the left side is lined entirely with cartons, and the positions of these boxes change dramatically from task to task.

Feature points on dynamic objects move with those objects, so they cannot provide valid constraints for robot positioning. At the same time, it is difficult to separate every dynamic object from the background and remove all the feature points that lie on them. If feature points on dynamic objects are added to the map during mapping, positioning later fails, because those features have disappeared and can no longer be matched.

1.3 Mixed human-machine traffic

People move with great uncertainty and may suddenly appear on the robot's driving path. A missed detection puts them in danger. To ensure personnel safety, the robot must detect people in multiple directions in real time, and ideally predict their trajectories accurately, so that navigation stays safe and reliable in mixed human-machine traffic.
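The article does not specify its trajectory prediction model. As a point of reference only, the simplest baseline is a constant-velocity model. Both the robot and the person are extrapolated over a short horizon, and their closest predicted approach is checked against a safety radius. The `Agent` type, the 3 s horizon, and the 1 m safety radius below are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    x: float   # position (m)
    y: float
    vx: float  # velocity (m/s), assumed constant over the prediction horizon
    vy: float

    def position_at(self, t):
        return self.x + self.vx * t, self.y + self.vy * t


def min_separation(robot, person, horizon_s=3.0, dt=0.1):
    """Closest predicted distance between robot and person within the horizon."""
    steps = int(round(horizon_s / dt))
    best = math.inf
    for k in range(steps + 1):
        t = k * dt
        rx, ry = robot.position_at(t)
        px, py = person.position_at(t)
        best = min(best, math.hypot(rx - px, ry - py))
    return best


def must_yield(robot, person, safety_radius_m=1.0):
    """True if the predicted paths come closer than the safety radius."""
    return min_separation(robot, person) < safety_radius_m
```

In this model, a robot driving along x at 1 m/s and a person walking toward its path from (2, -2) at 1 m/s meet at t = 2 s, so `must_yield` returns True. Real predictors replace the constant-velocity extrapolation, but the safety check has the same shape.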

1.4 High scene repeatability

In scenes with highly repetitive features, laser positioning encounters the same local features many times along the driving path. Moreover, most objects within the effective range are similarly shaped structures such as walls and pillars. The lack of distinctive features makes positioning difficult, as shown in Figure 2.

For visual mapping, textures that repeat many times cannot serve as global constraints. Such scenes contain few sufficiently robust corner features, and a line feature constrains the pose in only one direction, perpendicular to the line. On a plain white wall, even fewer feature points can be obtained, which easily leads to mismatches and positioning errors.
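The claim that a line feature constrains only one direction can be checked directly. Suppose a point is matched to a line n·p = c with unit normal n. The residual's Jacobian with respect to a 2D translation is then just n. The information matrix contributed by many points on the same line, Σ n nᵀ, is therefore singular, which means motion along the line is unobservable. The sketch below is minimal and 2D; it considers translation only and ignores rotation for brevity.

```python
def point_to_line_residual(point, translation, normal, offset):
    """Signed distance from the translated point to the line normal . p = offset.

    `normal` is assumed to be a unit vector.
    """
    px = point[0] + translation[0]
    py = point[1] + translation[1]
    return normal[0] * px + normal[1] * py - offset


def translation_information(normals):
    """J^T J for the translation; each point-to-line residual has Jacobian n^T."""
    a = sum(n[0] * n[0] for n in normals)
    b = sum(n[0] * n[1] for n in normals)
    d = sum(n[1] * n[1] for n in normals)
    return [[a, b], [b, d]]


def determinant(m):
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


if __name__ == "__main__":
    wall = (0.0, 1.0)  # the wall y = 0, represented by its normal +y
    # Sliding along the wall leaves the residual unchanged; moving off it does not.
    print(point_to_line_residual((1.0, 0.0), (5.0, 0.0), wall, 0.0))
    print(point_to_line_residual((1.0, 0.0), (0.0, 0.2), wall, 0.0))
    # Ten points on one wall give a singular matrix; a perpendicular wall fixes it.
    print(determinant(translation_information([wall] * 10)))
    print(determinant(translation_information([wall] * 10 + [(1.0, 0.0)])))
```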

1.5 Large changes in lighting in the scene

For purely visual solutions, lighting changes are another hard problem. Warehousing and logistics environments are affected not only by artificial lighting but also by natural light from windows and skylights. In dim environments, the visual sensor cannot extract enough information and is disturbed by noise, which degrades positioning. Large differences in lighting conditions between mapping and positioning also affect the results. Natural light from a window shining directly into the lens can even overexpose the camera, and when no features can be extracted, positioning fails.

1.6 High positioning accuracy requirements

In warehousing and manufacturing scenarios, robots must align precisely with conveyor belts. To increase storage density, goods are stored very tightly, which leaves narrow paths for the robot with tolerances of perhaps only a few centimeters. All of this places higher accuracy requirements on robot positioning.

1.7 Unified scheduling of forklifts and mobile robots

Forklifts and robots mount their lidars at quite different heights, so they scan the environment at different heights. During operation, however, the maps used by forklifts and robots must share the same coordinate system, so that the dispatch system can command every vehicle using the same points. Forklifts and robots must therefore build maps with high consistency and absolute accuracy. Different types of robots then reach the same position when picking and placing goods at the same cargo location.

Solutions

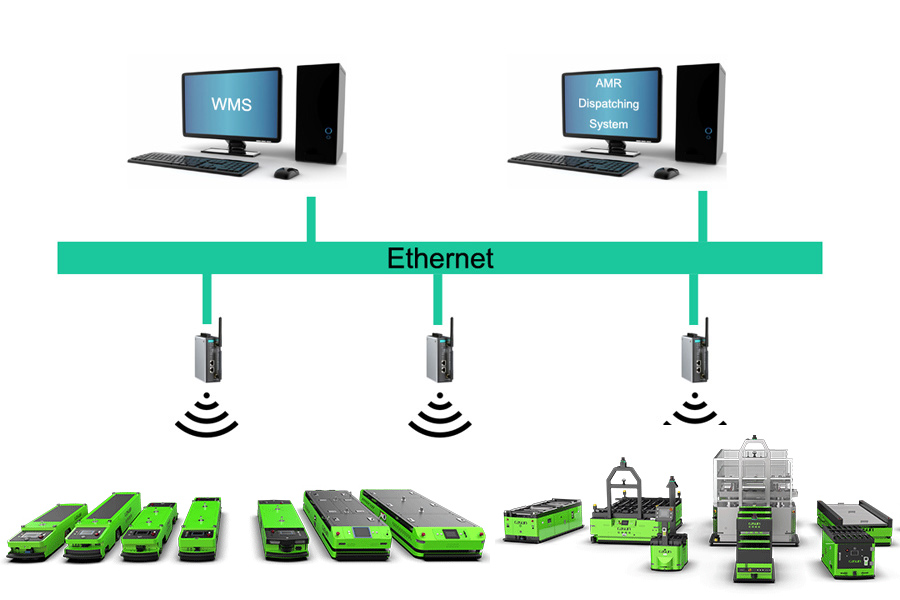

2.1 Hardware Architecture

To carry out automated handling, a robot needs a complete set of data acquisition, data processing, and control and planning modules. Product lines, task types (picking, handling, sorting, and so on), and sensor configurations all differ. The system hardware structure and the modular software architecture must therefore be designed rationally to improve development efficiency and operational stability, so that the design can ultimately be applied flexibly to robots across different products.

The robot hardware mainly includes the vehicle controller, battery module, sound and light alarm module, communication module, motor drive module, and charging module. Depending on the product and the actual operating environment, it also includes positioning and perception modules such as lidar, fisheye cameras, and depth cameras. The hardware architecture handles data collection and communication between modules. Each data stream is sent to the appropriate processor, and once processing is complete, the result goes to the corresponding control and drive module, which carries out the task.

2.2 Software Architecture

The robot software architecture is built up in layers. It runs from the underlying operating system to the algorithm platform, and from there to modular positioning, navigation, obstacle avoidance, tightly coupled sensor fusion, and control modules. The result is an efficient, flexible, and reconfigurable software system. The design has been applied to robots across different product lines.

(1) Positioning and mapping fusion module

The positioning and mapping fusion module collects the positioning and mapping results of each sub-module. It then jointly optimizes their reprojection errors to minimize the error in the robot's positioning and mapping. The data input and output interfaces are abstracted, so each sub-module has a flexible “pluggable” configuration. Modules can be removed for robots whose product does not carry the corresponding sensors.
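The article does not give the interface itself. One common way to obtain this kind of “pluggable” configuration is an abstract base class plus a registry, so that each product enables only the sub-modules its sensors support. In the sketch below, `LocalizationSource`, the `lidar` and `qr_code` modules, the `*_pose` frame keys, and the confidence weights are all hypothetical names chosen for illustration.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Optional


@dataclass
class PoseEstimate:
    x: float
    y: float
    yaw: float
    weight: float  # confidence handed to the fusion module (hypothetical)


class LocalizationSource(ABC):
    """Common input/output contract that every positioning sub-module implements."""

    name = "base"

    @abstractmethod
    def estimate(self, frame: dict) -> Optional[PoseEstimate]:
        """Return a pose from this sensor's data, or None if it has no data."""


REGISTRY: dict = {}  # module name -> LocalizationSource subclass


def register(cls):
    """Class decorator: make a sub-module available to product configurations."""
    REGISTRY[cls.name] = cls
    return cls


@register
class LidarSource(LocalizationSource):
    name = "lidar"

    def estimate(self, frame):
        pose = frame.get("lidar_pose")  # output of a hypothetical scan matcher
        return None if pose is None else PoseEstimate(*pose, weight=2.0)


@register
class QrCodeSource(LocalizationSource):
    name = "qr_code"

    def estimate(self, frame):
        pose = frame.get("qr_code_pose")  # absolute pose from a ground code
        return None if pose is None else PoseEstimate(*pose, weight=5.0)


def build_pipeline(enabled: list[str]) -> list[LocalizationSource]:
    """Instantiate only the sub-modules listed in a product's configuration."""
    return [REGISTRY[name]() for name in enabled]
```

In this sketch, a robot without a downward camera would call `build_pipeline(["lidar"])`. The fusion module only ever sees `PoseEstimate` objects, so removing a module requires no change downstream.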

(2) Perception module

The perception module receives semantic information and obstacle detection results from each sub-module: the laser, visual, and ultrasonic perception modules.

(3) Path planning, navigation, and control module

The path planning module plans and controls the robot's motion for specific tasks. It uses the pose from the positioning and mapping module, together with the map and the obstacle and semantic information from the perception module.
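The article does not name its planning algorithm. Purely to illustrate how the map and the perception outputs feed a planner, the sketch below runs A* on an occupancy grid. A* is a standard grid planner, swapped in here as an assumption. Obstacles reported by the perception module are marked as occupied cells.

```python
import heapq


def astar(grid, start, goal):
    """4-connected A* on an occupancy grid (0 = free, 1 = occupied).

    Returns the list of (row, col) cells from start to goal, or None if the
    goal is unreachable. Manhattan distance is an admissible heuristic here
    because every move costs 1.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0}
    came_from = {start: None}
    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

When the perception module reports a new obstacle, the corresponding cells are set to 1 and the planner is rerun. The control module then tracks the returned path.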

The robot collects data through the hardware architecture, and its modules exchange data through ROS2. Unlike ROS1, ROS2 uses the Data Distribution Service (DDS) communication protocol. It can deliver messages without copying in some configurations, for example intra-process communication, which saves CPU and memory resources. It also has no central master node, so it avoids the single point of failure present in ROS1. Together these properties better guarantee efficient, real-time data communication between modules.

(4) SLAM system

In the overall system design, the Simultaneous Localization And Mapping (SLAM) system takes a tightly coupled, multi-source heterogeneous approach to the information from various sensors and positioning elements.

- The front-view camera identifies feature points, semantic lines, two-dimensional codes, and various objects (Object). These algorithms are accelerated on the GPU, and the results are passed to the slave CPU for processing.
- The downward-looking camera recognizes two-dimensional codes on the ground to calculate the robot's absolute position. This part of the algorithm is optimized and accelerated on an FPGA.
- The underlying odometer and IMU data are read from the MCU and then fused and optimized.

Once each sensor's data have been processed by their respective algorithms on their own platforms, all results are sent to the main CPU, which fuses them and makes the final decision.
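Recovering the robot's absolute position from a ground code is a pose composition. Suppose the code's pose in the map is known, for example from a site survey, and the downward camera measures the code's pose in the robot frame. Then T_map_robot = T_map_code · T_robot_code⁻¹. The SE(2) sketch below is minimal and assumes the camera-to-robot extrinsics have already been applied to the measurement. The `SE2` class and the function names are illustrative.

```python
import math
from dataclasses import dataclass


def wrap(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass(frozen=True)
class SE2:
    x: float
    y: float
    theta: float

    def compose(self, other):
        """self * other: apply `other` in the frame defined by `self`."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return SE2(self.x + c * other.x - s * other.y,
                   self.y + s * other.x + c * other.y,
                   wrap(self.theta + other.theta))

    def inverse(self):
        """(R, t)^-1 = (R^T, -R^T t)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return SE2(-c * self.x - s * self.y, s * self.x - c * self.y, wrap(-self.theta))


def robot_pose_from_code(code_in_map, code_in_robot):
    """T_map_robot = T_map_code * inverse(T_robot_code)."""
    return code_in_map.compose(code_in_robot.inverse())
```

As an example, a code surveyed at map position (10, 5) that appears 0.2 m ahead of the robot places the robot at (9.8, 5), facing the same direction as the code.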

The laser-vision fusion SLAM navigation technology uses a tightly coupled multi-sensor fusion framework. It achieves high-precision time synchronization from the processor chip to the lidar, camera, IMU, and encoder, which ensures accurate timestamps on the acquired sensor data. Its key points include:

① Offline and online calibration

Before a robot leaves the factory, the intrinsic and extrinsic parameters of its sensors are strictly calibrated and checked with self-developed calibration equipment. Combined with the online calibration algorithm, the robot can repeat consistent motion trajectories over periods of several months. However the environment changes, production line docking is completed smoothly and accurately, which keeps the production line running continuously.

② Laser distortion correction

Most robots currently use mechanically rotating lidars, which take tens to hundreds of milliseconds to acquire each scan frame. When the robot moves at high speed, a scan frame is easily distorted. The SLAM algorithm uses IMU and wheel-speed data to apply motion compensation to each lidar scan frame, which solves the problem of trajectory deviation at high speed.
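Motion compensation can be sketched as follows. As an illustration, assume the robot moves with constant linear velocity v and yaw rate ω during one scan, as estimated from wheel-speed and IMU data, and that the lidar frame coincides with the robot frame. Each point is stamped with its capture time τ within the scan and is moved into the frame the robot occupies at the end of the scan. The function names are hypothetical.

```python
import math


def pose_at(v, w, tau):
    """Robot pose (x, y, theta) tau seconds after scan start, for constant v and w."""
    theta = w * tau
    if abs(w) < 1e-9:  # straight-line motion
        return v * tau, 0.0, theta
    # Exact integration of a constant-curvature arc.
    return v / w * math.sin(theta), v / w * (1.0 - math.cos(theta)), theta


def deskew(points, v, w, scan_time):
    """Re-express each raw point in the robot frame at the end of the scan.

    points: iterable of (x, y, tau) measured in the sensor frame at time tau,
    with 0 <= tau <= scan_time. The sensor frame is assumed to equal the
    robot frame (identity extrinsics) to keep the sketch short.
    """
    xe, ye, te = pose_at(v, w, scan_time)
    ce, se = math.cos(te), math.sin(te)
    corrected = []
    for px, py, tau in points:
        x, y, th = pose_at(v, w, tau)
        c, s = math.cos(th), math.sin(th)
        # Point in the scan-start frame: apply the pose at capture time.
        sx, sy = x + c * px - s * py, y + s * px + c * py
        # Point in the scan-end frame: apply the inverse of the end pose.
        dx, dy = sx - xe, sy - ye
        corrected.append((ce * dx + se * dy, -se * dx + ce * dy))
    return corrected
```

Consider a wall 5 m ahead, scanned while driving at 1 m/s with a 0.1 s scan. Without correction, the wall appears at 5.0 m at the start of the sweep and at 4.9 m at the end. After `deskew`, both points lie at 4.9 m, as they should.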

③ Dynamic update of online map

The SLAM system uses sliding window optimization (Sliding Window BA) and marginalization (Marginalization) to prune “outdated” nodes and map observations while preserving map and positioning accuracy. This keeps the size of the graph (Graph) under control. Dynamic online map updating keeps the map current, which enables dynamic environment perception and highly reliable positioning and ensures long-term stable robot operation.
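Marginalization removes the oldest state from the window without simply discarding its information. The Schur complement folds that state's constraints into a prior on the remaining states. The sketch below is minimal: it uses scalar states with a dense information matrix H and vector b, whereas real systems use block-sparse matrices of full poses. The window size and function names are illustrative assumptions.

```python
def marginalize_first(H, b):
    """Remove state 0 from the normal equations H x = b via the Schur complement.

    H' = H_rr - H_r0 * H_00^-1 * H_0r,   b' = b_r - H_r0 * H_00^-1 * b_0
    """
    h00 = H[0][0]
    n = len(H)
    H_new = [[H[i][j] - H[i][0] * H[0][j] / h00 for j in range(1, n)]
             for i in range(1, n)]
    b_new = [b[i] - H[i][0] * b[0] / h00 for i in range(1, n)]
    return H_new, b_new


def slide_window(H, b, max_states):
    """Marginalize the oldest states until the window holds at most max_states."""
    while len(H) > max_states:
        H, b = marginalize_first(H, b)
    return H, b
```

Take the three-state chain H = [[2, -1, 0], [-1, 2, -1], [0, -1, 2]] with b = [1, 0, 1]. Marginalizing the first state gives a 2×2 system, [[1.5, -1], [-1, 2]] with b = [0.5, 1]. Its solution for the two remaining states (1, 1) matches the full system's, so the window shrinks without losing accuracy.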

④ VX-SLAM positioning

The VX-SLAM system uses visual features at multiple levels. During mapping, it detects points (Point), lines (Line), and regions (Region) with traditional methods. It also applies Instance Segmentation to segment the objects in the field of view and classify them by instance. Based on the segmentation results, dynamic objects are filtered out, and static objects are added as constraints to the pose and map optimization. The final map superimposes the point feature map, line feature map, object map, special area map, and other maps.
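The filtering step can be sketched as a mask lookup. Every detected keypoint is checked against the instance segmentation output. Points that fall on an instance of a dynamic class are dropped before they enter pose and map optimization. The class list and the mask layout (one instance ID per pixel, with 0 for background) are assumptions for illustration, since the article does not specify them.

```python
# Hypothetical set of instance classes treated as dynamic in a warehouse.
DYNAMIC_CLASSES = {"person", "forklift", "carton"}


def filter_static_keypoints(keypoints, instance_mask, instance_labels):
    """Keep only keypoints that do not lie on a dynamic object.

    keypoints:       list of (u, v) pixel coordinates
    instance_mask:   instance_mask[v][u] is the instance ID at that pixel (0 = background)
    instance_labels: dict mapping instance ID -> class name
    """
    static = []
    for u, v in keypoints:
        instance_id = instance_mask[v][u]
        if instance_id != 0 and instance_labels.get(instance_id) in DYNAMIC_CLASSES:
            continue  # the feature moves with its object, so exclude it
        static.append((u, v))
    return static
```

Keypoints on static instances such as pillars are kept. They can serve as object-level landmarks in addition to ordinary point features.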

⑤ Unified scheduling of forklifts and robots

To schedule forklifts and robots uniformly, the SLAM maps built by the forklifts and by the robots must be merged. During mapping, recognized features such as walls and columns are used to align the coordinate systems of the two maps, which resolves the problem that each vehicle observes different areas. A multi-sensor fusion positioning scheme and an automated calibration scheme also ensure that all types of robots achieve the same absolute accuracy.
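Aligning the two maps from shared landmarks is a 2D rigid registration problem. Suppose the positions of the same columns or wall corners are known in both maps, with correspondences assumed to be known here. The least-squares rotation and translation then have a closed form. This is a standard technique offered as a sketch, not necessarily the article's method, and `align_2d` and `apply_rigid` are hypothetical names.

```python
import math


def apply_rigid(theta, tx, ty, p):
    """Rotate point p by theta, then translate it by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def align_2d(src, dst):
    """Least-squares rigid transform (theta, tx, ty) mapping src landmarks onto dst.

    src[i] and dst[i] must be the same physical landmark (e.g. one column)
    expressed in the forklift map and the robot map respectively.
    """
    n = len(src)
    cax = sum(x for x, _ in src) / n
    cay = sum(y for _, y in src) / n
    cbx = sum(x for x, _ in dst) / n
    cby = sum(y for _, y in dst) / n
    cos_sum = sin_sum = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - cax, ay - cay, bx - cbx, by - cby
        cos_sum += ax * bx + ay * by  # accumulates |a|^2 cos(theta)
        sin_sum += ax * by - ay * bx  # accumulates |a|^2 sin(theta)
    theta = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(theta), math.sin(theta)
    # Centroids must map onto each other: c_b = R c_a + t.
    tx = cbx - (c * cax - s * cay)
    ty = cby - (s * cax + c * cay)
    return theta, tx, ty
```

Once the transform has been estimated, applying it to every point of one map expresses both maps in the same coordinate system. The dispatch system can then use a single set of target points.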

(5) Intelligent perception

① Visual obstacle avoidance

Safety is the most important aspect of a mobile robot, and it covers both the safety of operators and the safety of the robot itself. Warehouse scenes change frequently, so obstacles that were not there before, such as cargo boxes, fallen goods, and pedestrians, may appear on the prescribed route. The robot must recognize these obstacles in real time to avoid danger.

To meet these requirements, an obstacle detection method combining color images and depth images was designed. The color-image branch focuses on low-lying objects and objects of fixed categories, such as books, pedestrians, and cargo boxes. The depth-image branch focuses on two kinds of objects: those whose color resembles the ground but which have noticeable height, and those whose types vary widely, such as shelf legs and small wooden blocks. Combining the two achieves robust obstacle recognition across a variety of scenarios while meeting real-time requirements.
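A simplified version of the depth branch and its combination with the color branch might look like the sketch below. It assumes that depth pixels have already been converted to 3D points in the robot frame (x forward, y left, z up, floor at z = 0). It also assumes that the color detector's boxes have been projected to floor positions. The corridor dimensions, height band, and point threshold are illustrative values, not the article's.

```python
from dataclasses import dataclass


@dataclass
class Corridor:
    """Region ahead of the robot that must be clear (hypothetical defaults)."""
    length_m: float = 1.5
    half_width_m: float = 0.4

    def contains(self, x: float, y: float) -> bool:
        return 0.0 < x < self.length_m and abs(y) < self.half_width_m


def depth_branch(points, corridor, min_h=0.02, max_h=1.8, min_points=5):
    """Flag an obstacle if enough 3D points inside the corridor rise above the floor.

    The height band catches objects the color branch may miss, such as items
    whose color resembles the floor; min_points suppresses isolated depth noise.
    """
    hits = sum(1 for x, y, z in points
               if corridor.contains(x, y) and min_h < z < max_h)
    return hits >= min_points


def color_branch(detections, corridor):
    """detections: (label, x, y) floor positions from a 2D detector."""
    return any(corridor.contains(x, y) for _, x, y in detections)


def obstacle_ahead(points, detections, corridor=None):
    corridor = corridor or Corridor()
    # Either branch alone is enough to stop: they cover different failure modes.
    return color_branch(detections, corridor) or depth_branch(points, corridor)
```

Taking the logical OR of the two branches is the conservative choice. A real system would also track detections over time to suppress single-frame false positives.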

② Intelligent identification of pallets

Intelligent pallet recognition is a very important part of unmanned forklifts. It provides precise final positioning for the forklift, so that the forks can be inserted accurately to pick up the goods. A camera mounted on the forklift automatically recognizes the position of the pallet and calculates the relative pose between the forklift and the pallet. This enables pallet insertion with higher accuracy than manual operation. In warehouse environments, pallet recognition faces the following difficulties:

There are many types and sizes of pallets, including custom pallets;

The pallet's position may deviate substantially from where it is expected;

Warehouse environments contain many objects that interfere with pallet recognition.

To address these difficulties, a dedicated pallet recognition algorithm was designed. It combines deep-learning-based pallet detection with precise pallet positioning based on 3D segmentation. Deep-learning detection adapts to complex warehouse scenes and identifies pallets stably and robustly, even with diverse pallet types and interference. For higher accuracy, once the deep learning model has detected a pallet, the pallet is precisely localized in the 3D point cloud. The position of each pallet column can be located as needed, which yields ultra-high-precision pallet pose recognition.
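The 3D refinement step can be illustrated with a simplified planar version. Suppose the detector's box has already been used to segment the pallet's front face from the point cloud. Projecting those points onto the floor plane and fitting a line by principal component analysis then gives the face's center and its orientation relative to the forks. This is an illustrative assumption about one possible refinement, not the article's algorithm. `fit_pallet_face` and `yaw_misalignment` are hypothetical names, and the frame convention (x forward, y left) is assumed.

```python
import math


def fit_pallet_face(points_xy):
    """Fit a line to floor-projected front-face points.

    Returns (cx, cy, face_angle): the face center in the forklift frame and
    the angle of the face line, taken from the major axis of the 2x2 covariance.
    """
    n = len(points_xy)
    cx = sum(x for x, _ in points_xy) / n
    cy = sum(y for _, y in points_xy) / n
    sxx = sum((x - cx) ** 2 for x, _ in points_xy) / n
    syy = sum((y - cy) ** 2 for _, y in points_xy) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points_xy) / n
    # Orientation of the major axis of the point spread, in [-pi/2, pi/2].
    face_angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return cx, cy, face_angle


def yaw_misalignment(face_angle):
    """Angle between the face line and the ideal face direction (the y axis).

    A square-on pallet face runs along y, so the result is wrapped to (-pi/2, pi/2].
    """
    err = face_angle - math.pi / 2
    while err <= -math.pi / 2:
        err += math.pi
    while err > math.pi / 2:
        err -= math.pi
    return err
```

Under these assumptions, `cy` is the lateral offset of the pallet center and `yaw_misalignment` is the rotation the forks must correct before insertion. The individual column positions could then be located along the fitted line.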

Summary

Many links in the supply chain and warehousing face the problems described above. Mobile robots, with their high flexibility, precision, and reliability, can greatly improve the efficiency of handling raw materials and finished products and help meet the challenge of sharply rising labor costs. As a result, they are being applied ever more widely and deeply.

Intelligent handling scenarios in the warehousing industry involve human-machine interaction and high-frequency environmental change, and they require mobile robots with powerful hybrid navigation technology. To meet these business needs, a complete set of efficient, flexibly configurable hardware systems was designed. Vision-laser fusion perception and positioning, planning, and control systems were designed alongside them, improving the stability of AMR operation in complex and changeable scenes.